The build system consists of several layers. First, there is the part which builds the RPMs and assists the buildmaster; currently, mach is the only alternative for this task. Then there is the part which ensures security. It has several mutually exclusive alternatives: vserver, SELinux, UML and QEMU. Because of the restrictions they introduce, most of these components need a special helper; for vserver, this is vserver-djinni. Last but not least, something is needed which glues the components together and provides a frontend to them.

The current fedora.us buildserver has a working setup of mach, vserver and vserver-djinni.

mach is a project founded by Thomas Vander Stichele; the name stands for ``Make A CHroot'', and it can be found at SourceForge. It prepares a minimal chroot environment for package builds, installs the needed build requirements and executes the build itself. It also offers other features, like easy configuration of the build tree (e.g. RHL9, FC1, fedora.us stable, ...) and collection of build results (binary/source RPMs, build logs), which made mach an essential part of the fedora.us buildsystem.

Problems:

- ordinary chroots are not secure, so additional layers are needed to provide security.

- mach performs some operations, like mounting the /proc filesystem into the chroot, which might conflict with the security setup.

- the current mach version (0.4.2) fails to resolve conditional BuildRequires: and those given as pathnames (e.g. BuildRequires: /usr/include/db.h).
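Path-style BuildRequires: such as /usr/include/db.h cannot be installed directly; they first have to be mapped to the package that provides the file. A minimal sketch of the splitting step follows. It assumes version operators are separated by whitespace and ignores conditionals and macros entirely; next_buildrequire() is a hypothetical helper, not part of mach.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* rpm version comparison operators; a token equal to one of these is
   followed by a version string, which is not a dependency name. */
static int is_version_op(const char *tok, size_t len)
{
    static const char *const ops[] = { "<", ">", "=", "<=", ">=" };
    for (size_t i = 0; i < sizeof ops / sizeof ops[0]; i++)
        if (strlen(ops[i]) == len && strncmp(ops[i], tok, len) == 0)
            return 1;
    return 0;
}

/* Hypothetical helper: return the next dependency name in the BuildRequires:
   value at *cur (length in *len, *is_path set for file dependencies) and
   advance *cur past it; return NULL when the value is exhausted.
   Assumes operators are whitespace-separated, as in "foo >= 1.0". */
static const char *next_buildrequire(const char **cur, size_t *len, int *is_path)
{
    const char *s = *cur;
    int expect_version = 0;

    for (;;) {
        /* entries are separated by commas and/or whitespace */
        while (*s && (isspace((unsigned char)*s) || *s == ','))
            s++;
        if (*s == '\0') {
            *cur = s;
            return NULL;
        }
        const char *start = s;
        while (*s && !isspace((unsigned char)*s) && *s != ',')
            s++;
        size_t n = (size_t)(s - start);

        if (is_version_op(start, n)) {
            expect_version = 1;          /* the next token is a version */
            continue;
        }
        if (expect_version) {
            expect_version = 0;          /* skip the version itself */
            continue;
        }
        *cur = s;
        *len = n;
        *is_path = (start[0] == '/');    /* file dependency, e.g. /usr/include/db.h */
        return start;
    }
}
```

Each entry flagged as a path would then still have to be resolved to its providing package, for instance through the repository's file lists, before it can be installed into the chroot.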

Vserver technology provides perfect process separation and unbreakable chroots, is very lightweight and has nearly no performance impact. Large parts of its security are based on Linux capabilities, and the use of network resources can be restricted by setting the allowed interfaces/IPs and filtering traffic with external tools like iptables or iproute.
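To illustrate how a capability mask restricts a context, here is a simplified user-space model; it is not vserver kernel code. The bit numbers are the standard ones from <linux/capability.h>, while struct vctx and the helper functions are hypothetical. Mounting a filesystem such as /proc requires CAP_SYS_ADMIN and creating device nodes requires CAP_MKNOD, so a build context stripped of both cannot perform these steps itself.

```c
#include <linux/capability.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of a security context and its capability mask. */
struct vctx {
    unsigned id;      /* context number */
    uint64_t caps;    /* bit n set => capability n granted */
};

#define CAPBIT(c) (UINT64_C(1) << (c))

static int ctx_capable(const struct vctx *ctx, int cap)
{
    return (ctx->caps & CAPBIT(cap)) != 0;
}

/* Operations mach needs inside the buildroot and the capability each requires. */
static int may_mount(const struct vctx *ctx)     { return ctx_capable(ctx, CAP_SYS_ADMIN); }
static int may_mknod_dev(const struct vctx *ctx) { return ctx_capable(ctx, CAP_MKNOD); }

/* Build a mask granting everything except the listed capabilities. */
static uint64_t all_caps_except(const int *drop, size_t ndrop)
{
    uint64_t mask = ~UINT64_C(0);
    for (size_t i = 0; i < ndrop; i++)
        mask &= ~CAPBIT(drop[i]);
    return mask;
}
```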

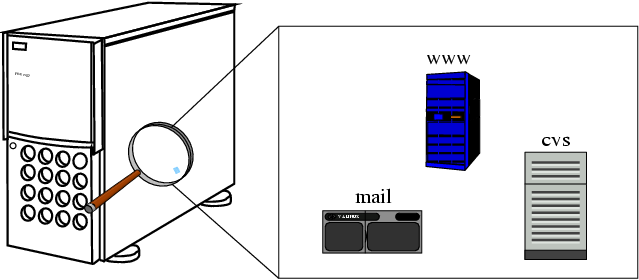

Vservers are identified by contexts; a process can only see processes of the same context. The setup is simple: each vserver appears as a standalone machine and is configured as such. Existing extensions allow context-specific disk quotas. Figure 2, “A typical vserver setup”, illustrates a typical vserver setup.
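The visibility rule itself fits in a few lines. This sketch uses hypothetical names and encodes only "same context, visible"; it ignores any special-purpose contexts a real vserver kernel may provide.

```c
#include <stddef.h>
#include <sys/types.h>

/* Simplified model: every process carries the context it was started in. */
struct proc {
    pid_t pid;
    unsigned ctx;
};

/* A process sees only processes of its own context. */
static int proc_visible(unsigned viewer_ctx, const struct proc *p)
{
    return p->ctx == viewer_ctx;
}

/* Copy the pids visible from viewer_ctx into out (capacity cap);
   return how many were copied. */
static size_t visible_pids(const struct proc *tab, size_t n, unsigned viewer_ctx,
                           pid_t *out, size_t cap)
{
    size_t k = 0;
    for (size_t i = 0; i < n && k < cap; i++)
        if (proc_visible(viewer_ctx, &tab[i]))
            out[k++] = tab[i].pid;
    return k;
}
```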

For the fedora.us buildsystem, the package-building happens in a separate vserver.

Figure 2. A typical vserver setup

    [root@cvs]# ps ax
      PID TTY   STAT TIME COMMAND
        1 ?     S    0:04 init
    26930 ?     S    0:00 /sbin/svlogd -t ./main/main ./main/auth ./main/debug
    26935 ?     S    0:00 /sbin/socklog unix /dev/log
    26979 ?     S    0:00 tcpserver-sshd ... 22 /usr/sbin/sshd -i
    27024 ?     S    0:05 crond
    27050 ?     S    0:00 tcpserver-cvs ... 2401 ... /usr/local/bin/cvswrap
      682 pts/2 S    0:00 /bin/bash -login
      728 pts/2 R    0:00 ps ax
    [root@cvs]#

    [root@www]# ps ax
        1 ?     S    0:04 init [3] --init
    12865 ?     S    0:00 /sbin/svlogd -t ./main/main ./main/auth ./main/debug
    12866 ?     S    0:00 /sbin/socklog unix /dev/log
    12869 ?     S    0:00 tcpserver-sshd -c 80 -q -l 0 ... 22 /usr/sbin/sshd -i
    12876 ?     S    0:00 /usr/sbin/crond
    12877 ?     S    0:00 /usr/sbin/httpd
    13647 ?     S    0:03 /usr/sbin/httpd
    13651 ?     S    0:03 /usr/sbin/httpd
      705 pts/0 S    0:00 /bin/bash -login
      708 pts/0 R    0:00 ps ax
    [root@www]#

    [root@mail]# ps ax
        1 ?     S    0:04 init [3] --init
    19811 ?     S    0:00 /sbin/svlogd -t ./main/main ./main/auth ./main/debug
    19812 ?     S    0:00 /sbin/socklog unix /dev/log
    19815 ?     S    0:00 tcpserver-sshd -c 80 -q -l 0 ... 22 /usr/sbin/sshd -i
    19818 ?     S    0:00 /usr/sbin/crond
    27020 ?     S    0:00 milter -O /var/lib/milter/default.py
    27021 ?     S    0:00 milter -O /var/lib/milter/default.py
    27022 ?     S    0:00 milter -O /var/lib/milter/default.py
    28254 ?     S    0:00 sendmail: accepting connections
    28262 ?     S    0:00 sendmail: Queue runner@01:00:00 for /var/spool/...
      765 pts/0 S    0:00 /bin/bash -login
      783 pts/0 R    0:00 ps ax
    [root@mail]#

Problems:

- vserver is only an unofficial kernel patch and is not expected to be merged into the kernel before 2.7/3.0. It conflicts with SELinux, which is the technology preferred by Red Hat. No patch exists yet for current RHL9/FC1/RHEL3 kernels; a vanilla 2.4.x kernel (without NPTL and exec-shield) is required. Therefore, a vserver-based buildsystem cannot be self-hosted on Fedora software.

- this changed environment can cause problems: the db4 of RHL9/FC1 does not work on non-NPTL kernels, and some packages (e.g. MIT-scheme) require an executable stack, so they may work well on the build machine, which has no exec-shield, but fail on real FC1 installations. Marking pre-FC1 binaries and disabling exec-shield for them will not help either, since the build environment itself is FC1.

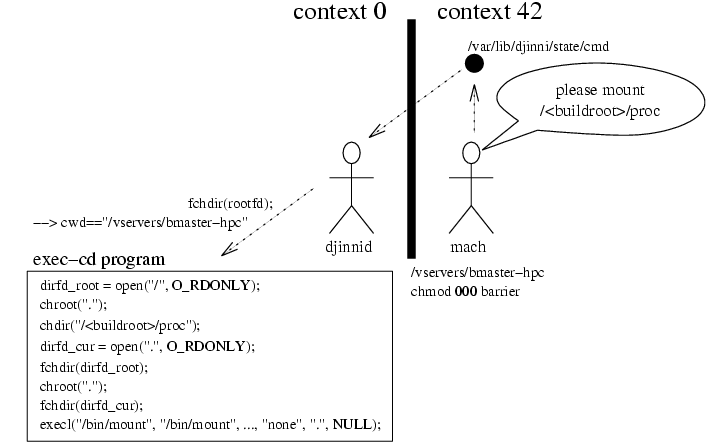

- because the build happens in a vserver with reduced capabilities, mach cannot mount /proc into the buildroot by itself and requires some kind of helper. The same applies to creating /dev entries.
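Whether a binary asks for an executable stack is recorded in its PT_GNU_STACK program header: PF_X set means an executable stack is requested, while a binary without that header is an unmarked legacy binary, which, as far as we understand exec-shield's default mode, runs with exec-shield disabled. A minimal checker for an in-memory ELF image follows; elf_wants_exec_stack() is a hypothetical name, and only native-endian 64-bit images are handled.

```c
#include <elf.h>
#include <stddef.h>
#include <string.h>

/* Returns 1 if the image requests an executable stack (or, being unmarked,
   defaults to one), 0 if it carries a non-executable PT_GNU_STACK header,
   -1 if it is malformed. Only native-endian ELFCLASS64 images are handled. */
static int elf_wants_exec_stack(const unsigned char *img, size_t len)
{
    Elf64_Ehdr eh;
    if (len < sizeof eh)
        return -1;
    memcpy(&eh, img, sizeof eh);               /* copy: avoids unaligned access */
    if (memcmp(eh.e_ident, ELFMAG, SELFMAG) != 0 || eh.e_ident[EI_CLASS] != ELFCLASS64)
        return -1;
    if (eh.e_phentsize != sizeof(Elf64_Phdr) || eh.e_phoff > len)
        return -1;

    for (size_t i = 0; i < eh.e_phnum; i++) {
        size_t off = (size_t)eh.e_phoff + i * sizeof(Elf64_Phdr);
        Elf64_Phdr ph;
        if (off > len || len - off < sizeof ph)
            return -1;                         /* header table runs past the image */
        memcpy(&ph, img + off, sizeof ph);
        if (ph.p_type == PT_GNU_STACK)
            return (ph.p_flags & PF_X) ? 1 : 0;
    }
    return 1;                                  /* no PT_GNU_STACK: unmarked legacy binary */
}
```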

The safe chroot(2) is a big but working hack: processes in a non-host context cannot enter directories with 000 permissions.

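    /* chroot barrier: a process outside the host context (s_context 0)
       gets EACCES on any directory whose permission bits are all zero */
    if ((mode & 0777) == 0 && S_ISDIR(mode) && current->s_context != 0)
            return -EACCES;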
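The kernel fragment above can be exercised as a user-space model. As we understand the vserver setup, the directory holding the vserver roots is given mode 000, so even root inside a context cannot walk up through it after breaking out of a nested chroot. chroot_barrier_check() and may_enter_dir() are hypothetical names; ctx 0 stands for the host context, as in the fragment.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <sys/stat.h>

/* User-space model of the kernel check; ctx 0 is the host context. */
static int chroot_barrier_check(mode_t mode, unsigned ctx)
{
    if ((mode & 0777) == 0 && S_ISDIR(mode) && ctx != 0)
        return -EACCES;
    return 0;
}

/* Apply the check to an existing path.
   Returns 0, -EACCES, or -errno if stat() fails. */
static int may_enter_dir(const char *path, unsigned ctx)
{
    struct stat st;
    if (stat(path, &st) != 0)
        return -errno;
    return chroot_barrier_check(st.st_mode, ctx);
}
```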

How SELinux will or can fulfill these requirements has not been explored yet. Restricting network resources is supported by SELinux.

Open questions:

- SELinux can protect foreign processes. But is it possible to hide them in /proc as well?

- Is chroot(2) implemented in a safe manner? Or, can parent directories of build-roots be protected with SELinux policies? Is a safe chroot(2) required at all?

- What is the performance impact of the policy checking?

- How can disk/memory usage be restricted with SELinux? Would CKRM[2] be an option?

- Can special mount operations (e.g. of the /proc filesystem) be allowed by the policy, or do they also require a userspace helper?

- Setting up an SELinux policy seems to be very complicated. How likely are holes in such a setup?

UML is similar to vserver but emulates an entire Linux system. It is more heavyweight, harder to set up and has a performance impact, but offers interesting features like a copy-on-write filesystem, which vserver lacks. It does not require a special host kernel, so its chances of getting into RHEL/Fedora are much higher than vserver's.
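Copy-on-write lets many instances share one read-only base image while each keeps its modifications private. The sketch below shows the general technique as a block overlay with a dirty bitmap; it is not UML's actual COW file format, and BLOCK_SIZE, NBLOCKS and all names are assumptions.

```c
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE 512   /* assumed block size */
#define NBLOCKS    64    /* assumed device size in blocks */

/* Reads fall through to the shared, read-only backing image unless the
   block has been written, in which case the private overlay copy is used. */
struct cow_dev {
    const uint8_t (*backing)[BLOCK_SIZE];   /* shared base image, never modified */
    uint8_t overlay[NBLOCKS][BLOCK_SIZE];   /* private copies of written blocks */
    uint8_t dirty[NBLOCKS / 8];             /* bitmap: 1 = block lives in overlay */
};

static int cow_is_dirty(const struct cow_dev *d, unsigned b)
{
    return (d->dirty[b / 8] >> (b % 8)) & 1;
}

static int cow_read(const struct cow_dev *d, unsigned b, uint8_t *out)
{
    if (b >= NBLOCKS)
        return -1;
    memcpy(out, cow_is_dirty(d, b) ? d->overlay[b] : d->backing[b], BLOCK_SIZE);
    return 0;
}

/* Whole-block writes only, so the old data never has to be copied first. */
static int cow_write(struct cow_dev *d, unsigned b, const uint8_t *in)
{
    if (b >= NBLOCKS)
        return -1;
    memcpy(d->overlay[b], in, BLOCK_SIZE);
    d->dirty[b / 8] |= (uint8_t)(1u << (b % 8));
    return 0;
}
```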

Since UML provides nearly a full featured Linux environment, /proc mounting or device creation would not need userspace helpers.

QEMU and Bochs emulate a complete machine. They are very heavyweight (qemu slows down compilation by a factor of 2-4[3]) and are available for common architectures (IA32) only. Therefore, they are not really an option.

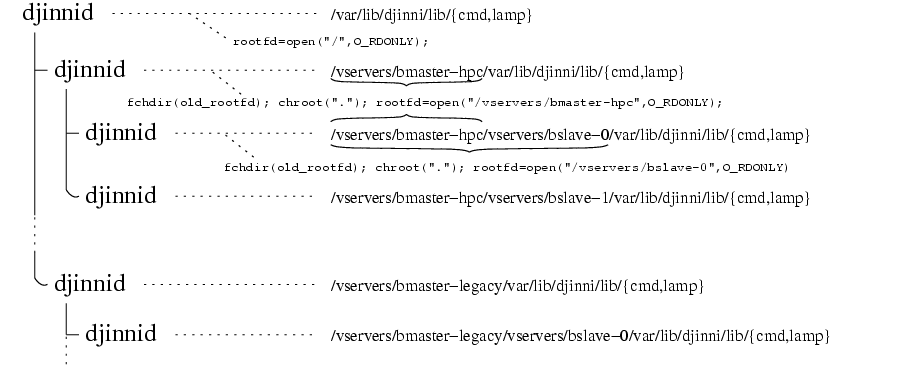

vserver-djinni is used to perform privileged tasks, like mounting directories, in unprivileged vservers. To do this, a djinnid daemon runs in the privileged host context and listens for commands from the vservers. One of djinni's design goals was to enable vserver-in-vserver functionality, which is not possible with the current vserver patch.

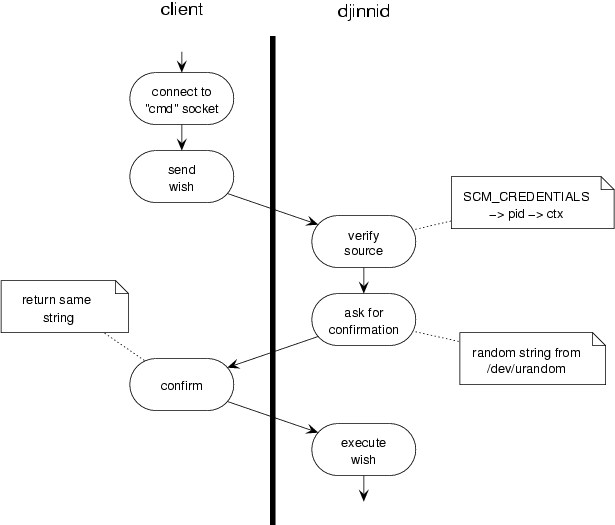

Besides the command socket, which is used to transmit commands from the client to the djinni daemon, there is a second, watchdog socket. Before any other operation, a ``djinni-rub'' daemon must connect to this watchdog socket and keep it open until the last operation. Once this socket gets closed (e.g. by killing the ``djinni-rub'' daemon), there is no way to re-enable the command socket from within the vserver.
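The watchdog rule amounts to a one-way latch. A minimal sketch, assuming djinnid checks the watchdog connection before executing each command; struct djinni_state and the helpers are hypothetical names, not vserver-djinni's code.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Commands are honoured only while the djinni-rub watchdog connection is
   open; once it closes, the command socket stays disabled for good. */
struct djinni_state {
    int watchdog_fd;   /* connected watchdog socket, -1 until rub connects */
    int disabled;      /* latched once the watchdog has gone away */
};

static int watchdog_alive(int fd)
{
    char c;
    ssize_t n = recv(fd, &c, 1, MSG_PEEK | MSG_DONTWAIT);
    if (n > 0)
        return 1;      /* unexpected data, but the peer is still there */
    if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK))
        return 1;      /* open, nothing to read */
    return 0;          /* 0 = peer closed; any other error is treated as closed */
}

/* Call before executing any command received on the command socket. */
static int commands_allowed(struct djinni_state *st)
{
    if (st->disabled || st->watchdog_fd < 0)
        return 0;
    if (!watchdog_alive(st->watchdog_fd)) {
        st->disabled = 1;   /* no way back from inside the vserver */
        return 0;
    }
    return 1;
}
```

In the real setup the watchdog connection would end when djinni-rub is killed; shutting down the peer end of a socketpair() has the same effect on the reading side.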

Each djinni process is assigned to exactly one vserver, and there are two kinds of commands for djinnid: those which execute a single command, and those which create a new djinnid process for a vserver. A single command starts with the vserver directory as its current working directory. By choosing the subsequent commands carefully, symlink attacks from within the vserver can be prevented effectively.

The other kind of command creates the new sockets (command and watchdog socket) within a vserver environment and starts a new djinni process listening on them. Upon startup of this new daemon, the top directory of the new vserver is entered in a secure way, and its file descriptor is stored internally and used for subsequent operations.
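A stored directory file descriptor allows every later lookup to be done relative to it. One common way to make such lookups resistant to symlink attacks, shown here as a sketch rather than djinni's actual implementation, is to open each path component separately with openat() and O_NOFOLLOW, refusing absolute paths and .. components. open_beneath() is a hypothetical name.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <sys/stat.h>
#include <unistd.h>

/* Open relpath below the directory rootfd without following any symlink
   and without leaving the tree via "..". Returns an fd or -1 (errno set). */
static int open_beneath(int rootfd, const char *relpath, int flags)
{
    if (relpath[0] == '/') {
        errno = EINVAL;                  /* absolute paths would bypass rootfd */
        return -1;
    }
    char *copy = strdup(relpath);
    if (copy == NULL)
        return -1;

    int cur = rootfd, fd = -1;
    char *save = NULL;
    char *comp = strtok_r(copy, "/", &save);
    if (comp == NULL)
        errno = EINVAL;                  /* empty path */
    while (comp != NULL) {
        char *next = strtok_r(NULL, "/", &save);
        if (strcmp(comp, "..") == 0) {
            errno = EPERM;               /* never walk upwards */
            fd = -1;
        } else {
            /* intermediate components must be real directories; the final
               one gets the caller's flags (mode 0600 if O_CREAT is used) */
            int f = next ? (O_RDONLY | O_DIRECTORY) : flags;
            fd = openat(cur, comp, f | O_NOFOLLOW | O_CLOEXEC, S_IRUSR | S_IWUSR);
        }
        if (cur != rootfd) {
            int saved = errno;
            close(cur);                  /* done with the intermediate directory */
            errno = saved;
        }
        if (fd < 0 || next == NULL)
            break;                       /* error, or fd is the final object */
        cur = fd;                        /* descend one level */
        comp = next;
    }
    free(copy);
    return fd;
}
```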

One djinnid serves exactly one master, which is identified by its uid/gid attributes and its context. The context is determined from the pid of the master process, which is transmitted through SCM_CREDENTIALS messages on the command socket. Once such a message has been transmitted to a newly created djinnid, all subsequent messages must have this origin (uid + ctx). To prevent certain kinds of attacks[4], an additional confirmation step is needed in the communication.
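The credential passing can be sketched with standard Linux SCM_CREDENTIALS ancillary data: the receiving socket enables SO_PASSCRED, and the kernel refuses credentials that an unprivileged sender is not entitled to claim. The binding rule (the first message fixes uid and context) is modeled separately. The pid-to-context lookup and the additional confirmation step are not shown, and all function names are hypothetical.

```c
#define _GNU_SOURCE
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

union cred_cmsg {                        /* correctly aligned control buffer */
    struct cmsghdr hdr;
    char buf[CMSG_SPACE(sizeof(struct ucred))];
};

/* Client side: send a command together with our own pid/uid/gid. */
static ssize_t send_with_creds(int fd, const void *data, size_t len)
{
    struct ucred cred = { .pid = getpid(), .uid = getuid(), .gid = getgid() };
    union cred_cmsg ctl;
    struct iovec iov = { .iov_base = (void *)data, .iov_len = len };
    struct msghdr msg = { .msg_iov = &iov, .msg_iovlen = 1,
                          .msg_control = ctl.buf, .msg_controllen = sizeof ctl.buf };
    memset(&ctl, 0, sizeof ctl);
    struct cmsghdr *cm = CMSG_FIRSTHDR(&msg);
    cm->cmsg_level = SOL_SOCKET;
    cm->cmsg_type = SCM_CREDENTIALS;
    cm->cmsg_len = CMSG_LEN(sizeof cred);
    memcpy(CMSG_DATA(cm), &cred, sizeof cred);
    return sendmsg(fd, &msg, 0);
}

/* Daemon side (SO_PASSCRED must be enabled): returns the byte count, or -1
   on error or when no credentials were attached. */
static ssize_t recv_with_creds(int fd, void *data, size_t len, struct ucred *out)
{
    union cred_cmsg ctl;
    struct iovec iov = { .iov_base = data, .iov_len = len };
    struct msghdr msg = { .msg_iov = &iov, .msg_iovlen = 1,
                          .msg_control = ctl.buf, .msg_controllen = sizeof ctl.buf };
    ssize_t n = recvmsg(fd, &msg, 0);
    if (n < 0)
        return -1;
    for (struct cmsghdr *cm = CMSG_FIRSTHDR(&msg); cm; cm = CMSG_NXTHDR(&msg, cm))
        if (cm->cmsg_level == SOL_SOCKET && cm->cmsg_type == SCM_CREDENTIALS) {
            memcpy(out, CMSG_DATA(cm), sizeof *out);
            return n;
        }
    return -1;
}

/* The first accepted message binds djinnid to its master; later messages must
   match uid and ctx (ctx would come from a hypothetical pid-to-context lookup). */
struct master_binding { int bound; uid_t uid; unsigned ctx; };

static int accept_origin(struct master_binding *b, uid_t uid, unsigned ctx)
{
    if (!b->bound) {
        b->bound = 1;
        b->uid = uid;
        b->ctx = ctx;
        return 1;
    }
    return b->uid == uid && b->ctx == ctx;
}
```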

vserver-djinni is configured through a hierarchical filesystem structure in /etc/djinni.d. Each file there whose name does not begin with a period denotes a command which can be sent to djinnid. Files beginning with a period mark special attributes of the vservers; these attributes are:

- .run: the command which will be executed; this file must be executable.

- .params: a syntax description of the allowed parameters; e.g. ``p'' for a path, ``v'' for a vserver name, ``[...]'' for a set of possible values and so on.

- .new: when this file exists, the command will be used to create a new vserver. The contents of this directory configure the command set of the new djinnid.

- .trusted: when this file exists for the current and all parent configurations, the vserver is considered trusted. Starting an untrusted vserver within an untrusted vserver is not supported.
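The naming rule (dot-files are attributes, everything else is a command) makes a configuration directory easy to scan. A sketch follows, assuming, as in the sample configuration, that each command is a directory holding its own dot-files; scan_djinni_dir() is a hypothetical name.

```c
#define _GNU_SOURCE
#include <dirent.h>
#include <fcntl.h>
#include <stdio.h>
#include <sys/stat.h>
#include <unistd.h>

/* Scan one configuration level (e.g. /etc/djinni.d or a command directory).
   Returns the number of commands (runnable ones counted in *nrunnable),
   or -1 if the directory cannot be opened. report may be NULL. */
static int scan_djinni_dir(const char *confdir, FILE *report, int *nrunnable)
{
    DIR *d = opendir(confdir);
    if (d == NULL)
        return -1;

    int dfd = dirfd(d);
    int ncmds = 0;
    char path[512];
    struct dirent *e;

    *nrunnable = 0;
    while ((e = readdir(d)) != NULL) {
        if (e->d_name[0] == '.')
            continue;                    /* attribute file, "." or ".." */

        struct stat st;
        if (fstatat(dfd, e->d_name, &st, AT_SYMLINK_NOFOLLOW) != 0 || !S_ISDIR(st.st_mode))
            continue;                    /* sketch assumption: commands are directories */
        ncmds++;

        snprintf(path, sizeof path, "%s/.run", e->d_name);
        int runnable = faccessat(dfd, path, X_OK, 0) == 0;
        snprintf(path, sizeof path, "%s/.new", e->d_name);
        int creates_vserver = faccessat(dfd, path, F_OK, 0) == 0;

        if (runnable)
            (*nrunnable)++;
        if (report != NULL)
            fprintf(report, "%-20s run=%s new=%s\n", e->d_name,
                    runnable ? "yes" : "no", creates_vserver ? "yes" : "no");
    }
    closedir(d);
    return ncmds;
}
```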

Example 1. Sample djinni.d configuration

    /etc/djinni.d
    |-- .trusted
    `-- new_bmaster
        |-- .new
        |-- .params
        |-- .trusted
        |-- new_bslave
        |   |-- .new
        |   |-- .params
        |   |-- .run
        |   |-- prepare_machroot
        |   |   |-- .params
        |   |   `-- .run
        |   `-- shutdown_machroot
        |       |-- .params
        |       `-- .run
        `-- stop_bslave
            |-- .params
            `-- .run
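The .trusted rule requires the marker at every level from the configuration root down to the command. As we read it, in the sample above a vserver created via new_bmaster is trusted, while one created via new_bslave is not, since new_bslave has no .trusted. A minimal sketch of that check; djinni_trusted() is a hypothetical name.

```c
#include <stdio.h>
#include <string.h>
#include <sys/stat.h>

/* Does dir/.trusted exist? */
static int has_trusted(const char *dir)
{
    char p[1024];
    struct stat st;
    int n = snprintf(p, sizeof p, "%s/.trusted", dir);
    if (n < 0 || (size_t)n >= sizeof p)
        return 0;                        /* path too long: treat as untrusted */
    return stat(p, &st) == 0;
}

/* 1 if .trusted exists in confroot and in every directory on the way down
   to confroot/relpath (e.g. "new_bmaster/new_bslave"), else 0. */
static int djinni_trusted(const char *confroot, const char *relpath)
{
    char dir[1024];
    int n = snprintf(dir, sizeof dir, "%s", confroot);
    if (n < 0 || (size_t)n >= sizeof dir || !has_trusted(dir))
        return 0;

    size_t used = (size_t)n;
    const char *s = relpath;
    while (*s) {
        while (*s == '/')
            s++;                         /* skip separators */
        if (*s == '\0')
            break;
        size_t len = strcspn(s, "/");
        if (used + 1 + len >= sizeof dir)
            return 0;
        dir[used++] = '/';               /* descend one configuration level */
        memcpy(dir + used, s, len);
        used += len;
        dir[used] = '\0';
        if (!has_trusted(dir))
            return 0;
        s += len;
    }
    return 1;
}
```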

[4] An attacker in another context sends a command and terminates the sending process immediately. He then forces the creation of a process in the authorized context (e.g. an ssh login as an ordinary user), hoping it receives the freed PID, and bets on a race between ``send wish'' and ``verify source''.